Nvidia has launched a full-stack autonomous vehicle (AV) software platform intended to accelerate the large-scale deployment of safe, intelligent transportation for auto makers, truck manufacturers, robotaxi companies and startups worldwide.

The Nvidia Drive AV software platform is paired with Nvidia's accelerated computing hardware and is designed to give the industry a robust foundation for AI-powered mobility.

Full-stack software approach

Nvidia Drive offers a modular and flexible approach, allowing customers to scale by adopting either the full technology stack or selected components based on their requirements. The software architecture supports real-time sensor fusion and continuous improvement through over-the-air updates.

Its scalable design enables auto makers to implement selected advanced driver-assistance features – such as surround perception, automated lane changes, parking and active safety – for Level 2++ and Level 3 vehicles, while providing a path to higher levels of automation as technology and regulations develop.

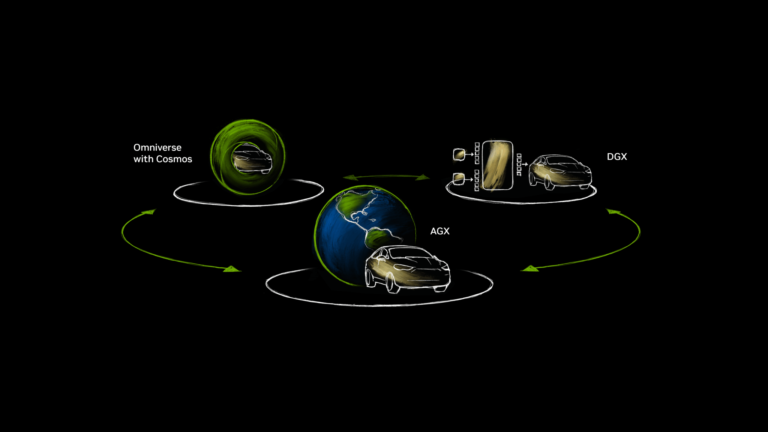

Complementing the AV software is Nvidia's three-computer solution, which spans the entire AV development pipeline and is designed to address the challenges of deploying autonomous vehicles safely at scale.

The first computer is Nvidia DGX systems and GPUs, used to train AI models and develop AV software. The second is the Nvidia Omniverse and Nvidia Cosmos platforms running on Nvidia OVX systems, used for simulation and synthetic data generation; these enable testing and validation of autonomous driving scenarios as well as optimization of smart factory operations. The third is the automotive-grade Nvidia Drive AGX in-vehicle computer, which processes sensor data in real time to enable safe, highly automated and autonomous driving.

Embracing generative AI

Most traffic accidents are linked to human factors. AI-driven systems are seen as a way to improve road safety, but building an autonomous system that can safely navigate the physical world is challenging.

AV software development has traditionally followed a modular approach, with separate components for perception, prediction, planning and control. Nvidia Drive instead takes a unified approach: deep learning and foundation models trained on large datasets of human driving behavior process sensor data and directly control vehicle actions, removing the need for predefined rules or a traditional modular pipeline. This lets vehicles learn from large volumes of real and synthetic driving data so they can navigate safely with human-like decision-making.

The Nvidia Omniverse Blueprint for AV simulation supports the development pipeline by enabling physically accurate sensor simulation for autonomous vehicle training, testing and validation. When used alongside Nvidia’s three-computer solution, it allows developers to convert thousands of human-driven miles into billions of virtually driven miles, enhancing data quality and supporting the efficient, scalable and continuous improvement of autonomous vehicle systems.

End-to-end safety

Earlier this year, Nvidia launched Nvidia Halos, an end-to-end safety system that integrates hardware, software, AI models and tools to support safe AV development and deployment from cloud to car. Halos provides guardrails for AV safety across simulation, training and deployment.

A key part of this safety framework is Nvidia DriveOS, an operating system for autonomous driving that is safety-certified to ASIL B/D (the automotive safety integrity levels defined in ISO 26262).

With Halos and support for intelligent, adaptive sensors, Nvidia says the AV stack can deliver the tools, compute power and foundational AI models needed to accelerate safe, intelligent mobility.
