Researchers at Japan’s Ritsumeikan University have developed a network that combines 3D lidar and 2D image data to enable more robust detection of small objects.

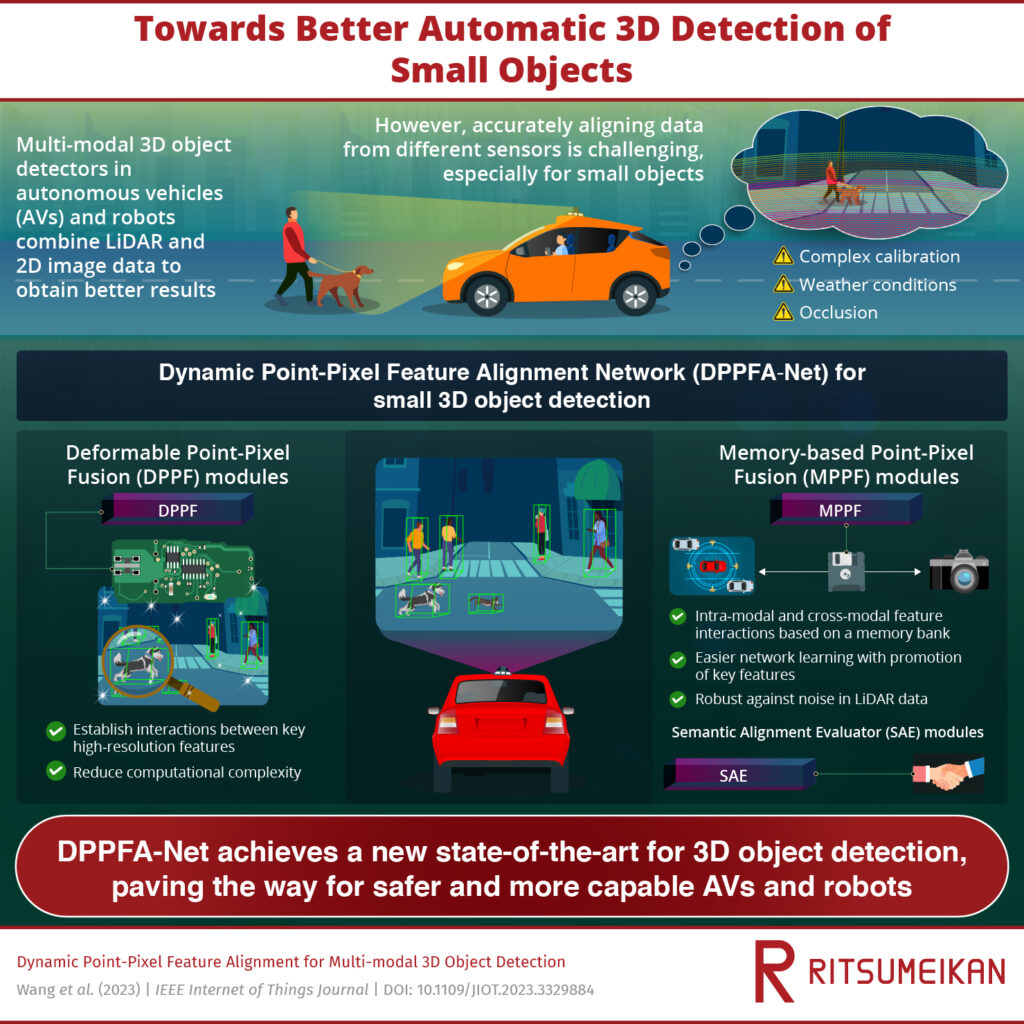

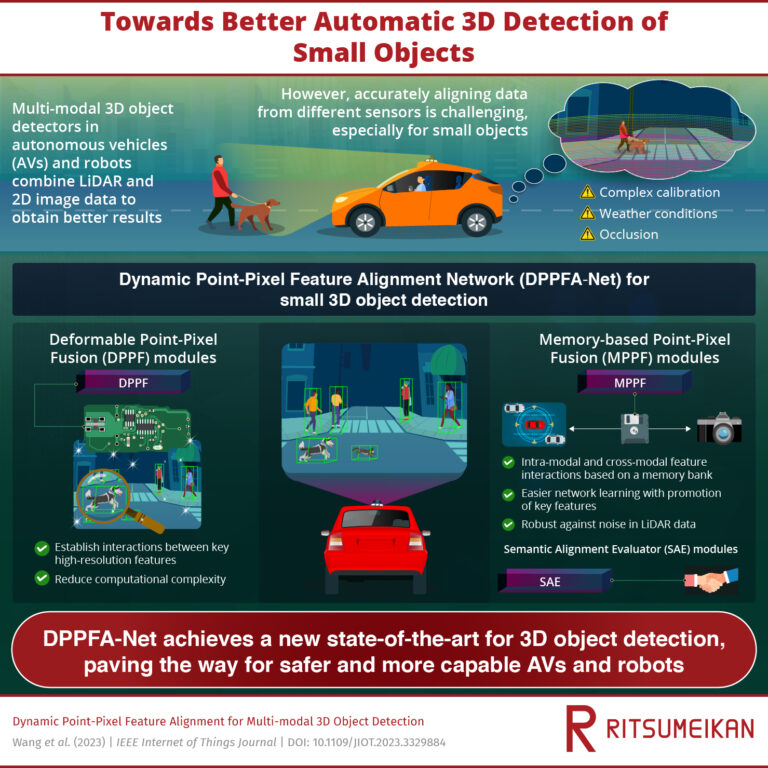

Robots and autonomous vehicles can use 3D point clouds from lidar sensors and camera images to perform 3D object detection. However, current techniques that combine both types of data struggle to accurately detect small objects. Now, researchers from Japan have developed DPPFA-Net, a network designed to overcome challenges related to occlusion and noise introduced by adverse weather. Their findings could pave the way for more perceptive and capable autonomous systems.

Robotics and autonomous vehicles are among the most rapidly growing domains in the technological landscape, with the potential to make work and transportation safer and more efficient. Since both robots and self-driving cars need to accurately perceive their surroundings, 3D object detection methods are an active area of study. Most 3D object detection methods employ lidar sensors to create 3D point clouds of their environment. Simply put, lidar sensors use laser beams to rapidly scan their surroundings and measure the distances to nearby objects and surfaces. However, using lidar data alone can lead to errors because lidar is highly sensitive to noise, especially in adverse weather such as rain.

To tackle this issue, scientists have developed multi-modal 3D object detection methods that combine 3D lidar data with 2D RGB images taken by standard cameras. While the fusion of 2D images and 3D lidar data leads to more accurate 3D detection results, it still faces its own set of challenges, with accurate detection of small objects remaining difficult. The problem mainly lies in properly aligning the semantic information extracted independently from the 2D and 3D datasets, which is hard due to issues such as imprecise calibration or occlusion.
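To see why calibration matters so much for small objects, consider how a lidar point is matched to an image pixel in the first place. The sketch below is a generic pinhole-camera projection, not code from the DPPFA-Net paper; the intrinsic matrix `K`, the transform `T_cam_from_lidar`, and the 0.5-degree calibration error are all illustrative assumptions.

```python
import numpy as np

def project_points(points_xyz, K, T_cam_from_lidar):
    """Project N x 3 lidar points into pixel coordinates.

    K is a 3 x 3 camera intrinsic matrix and T_cam_from_lidar a 4 x 4
    rigid transform from the lidar frame to the camera frame
    (x right, y down, z forward). Returns the N x 2 pixel coordinates
    and a boolean mask of points lying in front of the camera.
    """
    n = points_xyz.shape[0]
    homogeneous = np.hstack([points_xyz, np.ones((n, 1))])
    cam = (T_cam_from_lidar @ homogeneous.T).T[:, :3]
    in_front = cam[:, 2] > 0
    depth = np.where(in_front, cam[:, 2], 1.0)  # avoid dividing by zero or negatives
    uvw = (K @ cam.T).T
    pixels = uvw[:, :2] / depth[:, None]
    return pixels, in_front

# Hypothetical intrinsics: 700 px focal length, principal point (600, 180).
K = np.array([[700.0, 0.0, 600.0],
              [0.0, 700.0, 180.0],
              [0.0, 0.0, 1.0]])

# One point 20 m ahead, already expressed in a camera-aligned frame.
point = np.array([[1.0, 0.5, 20.0]])
pix_good, _ = project_points(point, K, np.eye(4))

# The same point with an assumed 0.5-degree yaw error in the calibration.
theta = np.deg2rad(0.5)
c, s = np.cos(theta), np.sin(theta)
T_bad = np.array([[c, 0.0, s, 0.0],
                  [0.0, 1.0, 0.0, 0.0],
                  [-s, 0.0, c, 0.0],
                  [0.0, 0.0, 0.0, 1.0]])
pix_bad, _ = project_points(point, K, T_bad)

# Pixel distance between the correct and the miscalibrated projection.
shift = float(np.linalg.norm(pix_bad - pix_good))
```

With these assumed numbers, the half-degree error moves the projected point by several pixels. For a large vehicle that is a minor offset, but a distant pedestrian may span only a dozen or so pixels in width, so the point can end up paired with background features instead.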

Against this backdrop, a research team led by Professor Hiroyuki Tomiyama from Ritsumeikan University, Japan, has developed an innovative approach to make multi-modal 3D object detection more accurate and robust. The proposed scheme, called ‘Dynamic Point-Pixel Feature Alignment Network’ (DPPFA-Net), is described in a paper published in IEEE Internet of Things Journal on 3 November 2023.

The model comprises an arrangement of multiple instances of three novel modules: the Memory-based Point-Pixel Fusion (MPPF) module, the Deformable Point-Pixel Fusion (DPPF) module, and the Semantic Alignment Evaluator (SAE) module. The MPPF module is tasked with performing explicit interactions between intra-modal features (2D with 2D and 3D with 3D) and cross-modal features (2D with 3D). The use of the 2D image as a memory bank reduces the difficulty in network learning and makes the system more robust against noise in 3D point clouds. Moreover, it promotes the use of more comprehensive and discriminative features.
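One common way to let features from one modality query a "memory bank" built from another is scaled dot-product attention. The sketch below illustrates that general idea only: it is an assumption that MPPF resembles it, and the feature sizes, the random inputs, and the simple residual sum are hypothetical choices. A trained network would also apply learned projections to the queries, keys, and values, which are omitted here.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def cross_attention(queries, memory):
    """Let each query row gather information from all memory rows.

    queries: N x d array (here: per-point lidar features).
    memory:  M x d array (here: per-pixel image features).
    Returns the N x d attended features and the N x M attention weights.
    """
    d = queries.shape[-1]
    scores = queries @ memory.T / np.sqrt(d)
    weights = softmax(scores, axis=-1)
    return weights @ memory, weights

rng = np.random.default_rng(0)
point_feats = rng.normal(size=(5, 16))   # 5 lidar points, 16-dim features
pixel_feats = rng.normal(size=(12, 16))  # 12 image pixels acting as the memory bank

attended, weights = cross_attention(point_feats, pixel_feats)
fused = point_feats + attended  # residual fusion of 3D and 2D information
```

Each row of `weights` sums to one, so every point receives a weighted blend of pixel features. If a point's own feature is corrupted by noise, the blend still draws on clean image evidence, which is the intuition behind using the image as memory.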

In contrast, the DPPF module performs interactions only at pixels in key positions, which are determined via a smart sampling strategy. This allows for feature fusion in high resolutions at a low computational complexity. Finally, the SAE module helps ensure semantic alignment between both data representations during the fusion process, which mitigates the issue of feature ambiguity.
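The cost argument behind interacting only at key positions can be illustrated with a small sampling routine. This is a sketch in the spirit of deformable sampling, not the DPPF module itself: in a real network the offsets and weights would be predicted by learned layers, whereas here they are fixed, hypothetical values, and the feature map is a toy grid.

```python
import numpy as np

def bilinear_sample(feat_map, u, v):
    """Sample an H x W x C feature map at float position (u, v).

    u is the column coordinate and v the row coordinate; positions
    outside the map are clamped to the border.
    """
    h, w, _ = feat_map.shape
    u = float(np.clip(u, 0, w - 1))
    v = float(np.clip(v, 0, h - 1))
    u0, v0 = int(np.floor(u)), int(np.floor(v))
    u1, v1 = min(u0 + 1, w - 1), min(v0 + 1, h - 1)
    du, dv = u - u0, v - v0
    top = (1 - du) * feat_map[v0, u0] + du * feat_map[v0, u1]
    bottom = (1 - du) * feat_map[v1, u0] + du * feat_map[v1, u1]
    return (1 - dv) * top + dv * bottom

def sample_key_positions(feat_map, ref_uv, offsets, weights):
    """Aggregate features from a few positions around a reference pixel."""
    samples = np.stack([
        bilinear_sample(feat_map, ref_uv[0] + du, ref_uv[1] + dv)
        for du, dv in offsets
    ])
    weights = np.asarray(weights, dtype=float)
    weights = weights / weights.sum()  # normalize so the result is a weighted mean
    return weights @ samples

# Toy 6 x 8 feature map whose two channels store (column, row) indices.
cols, rows = np.meshgrid(np.arange(8), np.arange(6))
feat_map = np.stack([cols, rows], axis=-1).astype(float)

# Hypothetical fixed offsets and equal weights around pixel (u=3, v=2).
offsets = [(-1.0, 0.0), (1.0, 0.0), (0.0, -1.0), (0.0, 1.0)]
aggregated = sample_key_positions(feat_map, (3.0, 2.0), offsets, [1, 1, 1, 1])
```

Here each query touches four positions rather than all 48 pixels of the map, and that gap widens as the resolution grows. This is why sparse sampling makes high-resolution fusion affordable.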

The researchers tested DPPFA-Net by comparing it to the top performers on the widely used KITTI Vision Benchmark. Notably, the proposed network achieved average precision improvements as high as 7.18% under different noise conditions. To further test the capabilities of their model, the team created a new noisy dataset by introducing artificial multi-modal noise in the form of rainfall to the KITTI dataset. The research team says the results show that the proposed network performed better than existing models not only in the face of severe occlusions but also under various levels of adverse weather conditions. “Our extensive experiments on the KITTI dataset and challenging multi-modal noisy cases reveal that DPPFA-Net reaches a new state-of-the-art,” remarked Prof. Tomiyama.

Notably, there are various ways in which accurate 3D object detection methods could improve our lives. Self-driving cars, which rely on such techniques, have the potential to reduce accidents and improve traffic flow and safety. Furthermore, the implications in the field of robotics should not be understated. “Our study could facilitate a better understanding and adaptation of robots to their working environments, allowing a more precise perception of small targets,” explained Prof. Tomiyama. “Such advancements will help improve the capabilities of robots in various applications.”

Another use for 3D object detection networks is the pre-labeling of raw data for deep-learning perception systems. This would greatly reduce the cost of manual annotation, accelerating developments in the field, according to the team.